Local SSDs are extremely fast drives that GCP offers for Compute Engine VMs, but they are kind of a one-time-use situation: when the VM is powered down, the drives are cleared and all data is gone. I find that they’re great for instance templates. At work, we use them to power our Manticore Search instance(s).

Run It By Hand #

While I am a fan of automation, I truly believe the best way to learn is by doing. Make sure you clean up at the end of this and delete the VM. For a pleb like me, this VM gets REALLY FUCKING EXPENSIVE if left running idle.

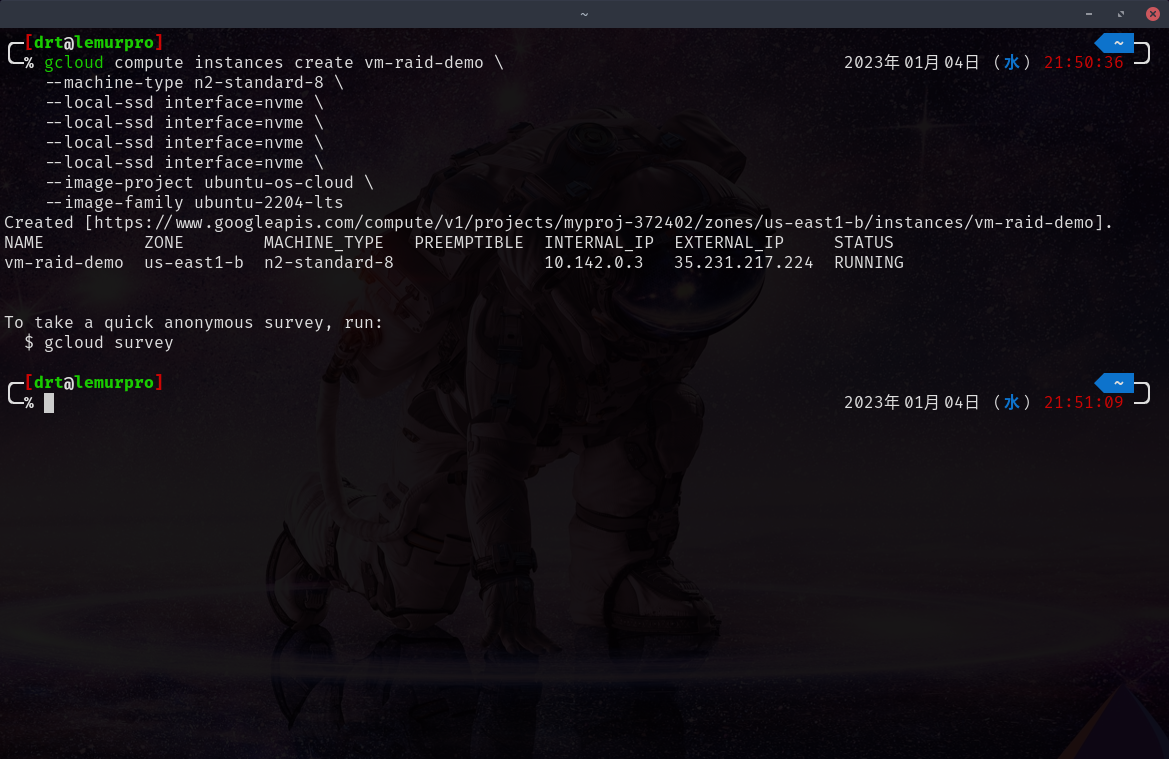

Creating the VM #

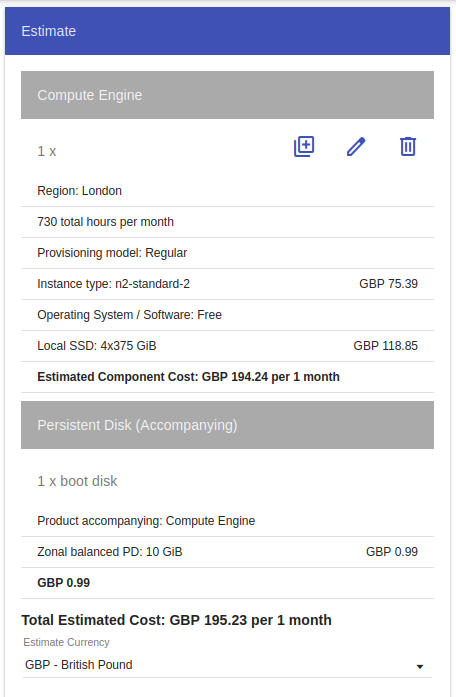

In case you only looked at the price in the image above, the following command creates a new Compute Engine VM: an N2 general-purpose machine (n2-standard-8) with 8 vCPUs and 32GB of RAM.

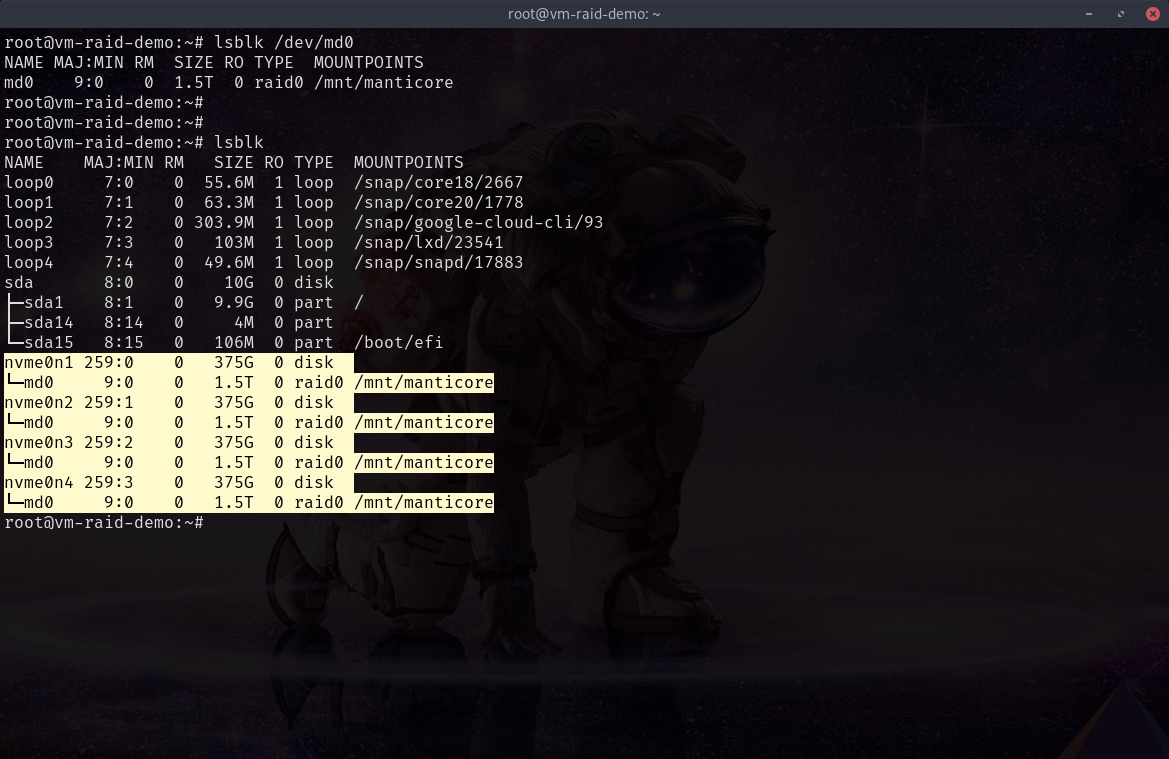

Attached to this machine are four local NVME drives.

Once in RAID 0, they will give the machine an extra 1.5TB of storage.
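The 1.5TB figure is just the drive sizes added together, since RAID 0 stripes data across every drive with no redundancy. A quick sketch of the arithmetic, assuming each Local SSD is 375GB (the variable names here are mine, and the size is worth double-checking against the current GCP docs):

```bash
# Assumption: each Local SSD is 375GB. RAID 0 capacity is the sum of all members.
DRIVE_COUNT=4
DRIVE_SIZE_GB=375
TOTAL_GB=$((DRIVE_COUNT * DRIVE_SIZE_GB))
echo "${TOTAL_GB}GB"   # prints 1500GB, i.e. 1.5TB
```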

For the demo, I’m using Ubuntu 22.04 since I know mdadm is installed by default.

If you use a different OS, YMMV.

gcloud compute instances create vm-raid-demo \
  --machine-type n2-standard-8 \
  --local-ssd interface=nvme \
  --local-ssd interface=nvme \
  --local-ssd interface=nvme \
  --local-ssd interface=nvme \
  --image-project ubuntu-os-cloud \
  --image-family ubuntu-2204-lts

Accessing the VM #

Assuming you copy/pasted the previous command, SSH into the box with the following:

gcloud compute ssh vm-raid-demo

Once connected, it’s best to do everything as the root user.

sudo su -

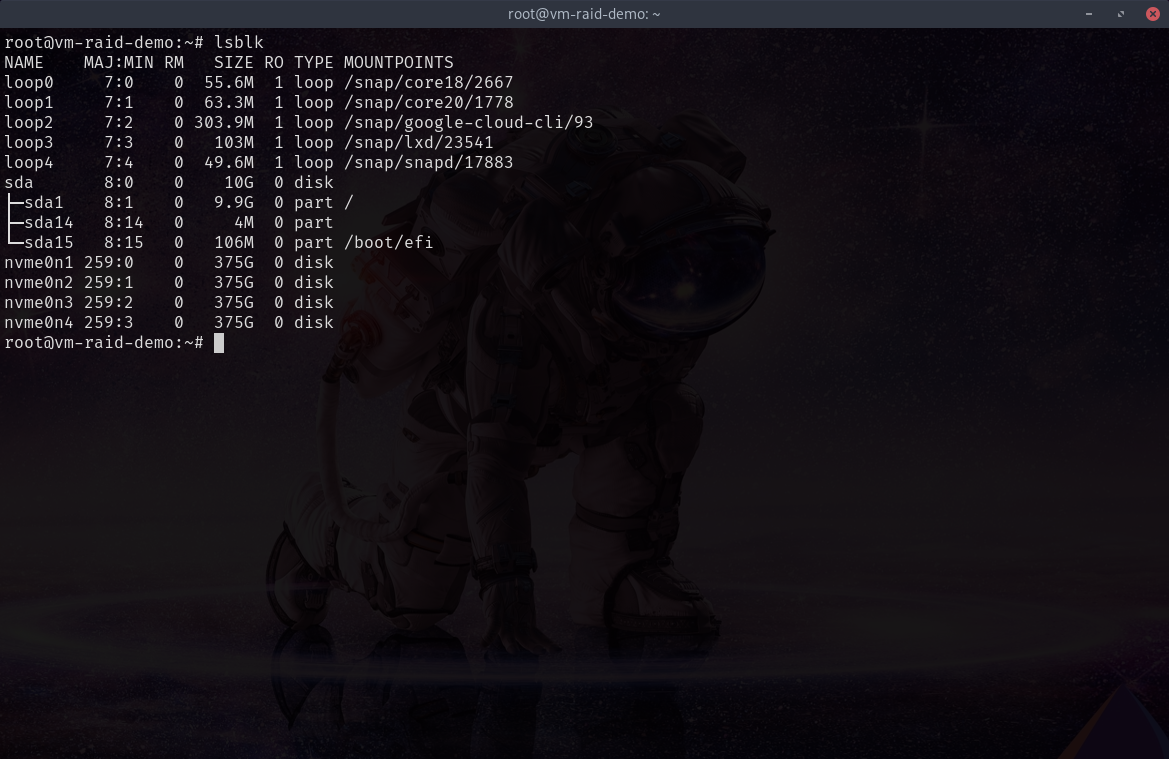

Finding the Drives #

You can use lsblk to view all the drives.

We can filter the output to show only the NVME drives with grep, and then clean it up so that only the device paths remain with cut and awk.

lsblk | grep -oE 'nvme[a-z0-9A-Z]*' | cut -d' ' -f1 | awk '{ print "/dev/"$1 }'
# output
# /dev/nvme0n1
# /dev/nvme0n2
# /dev/nvme0n3
# /dev/nvme0n4

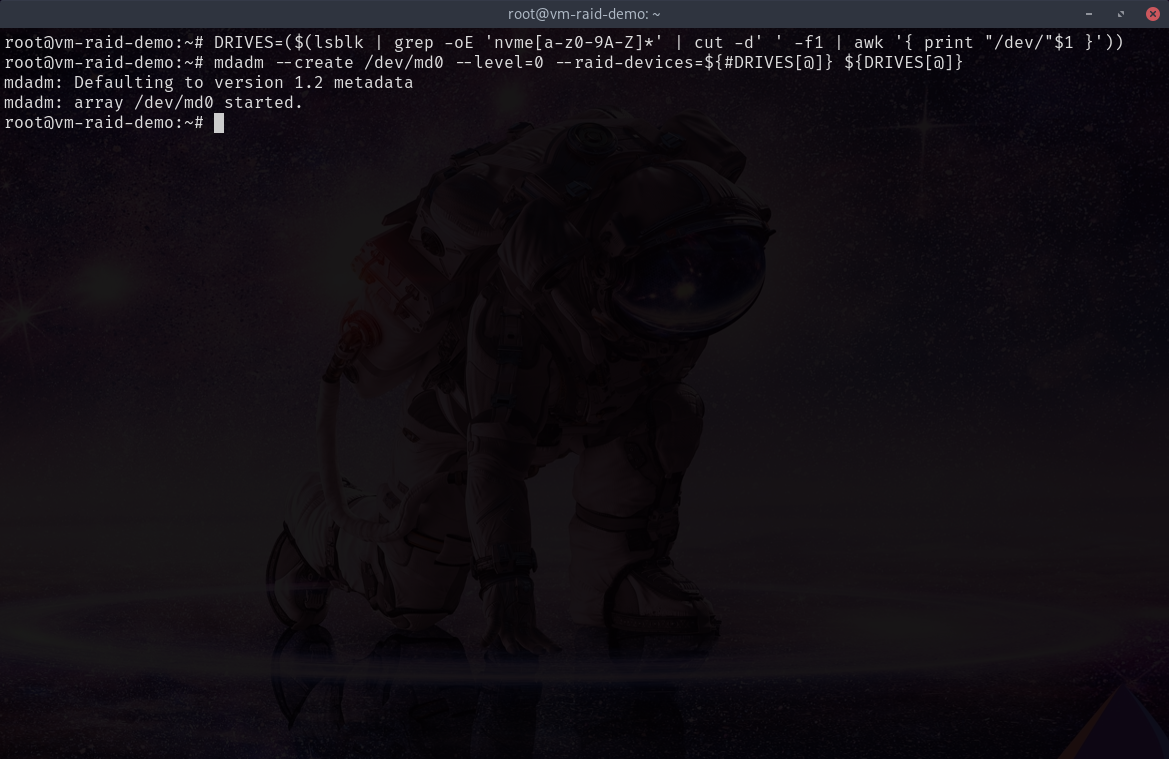
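If you want to poke at the filter without a VM handy, here is a minimal sketch that runs the same grep and awk stages against canned text. The function name nvme_paths and the sample output are made up for illustration. I left out cut because grep -o already prints only the matched name, so it has nothing left to trim.

```bash
# Hypothetical helper: reads lsblk-style text on stdin, prints one /dev path per NVME name.
nvme_paths() {
  grep -oE 'nvme[a-z0-9A-Z]*' | awk '{ print "/dev/" $1 }'
}

# Made-up sample that resembles lsblk output on this kind of VM.
SAMPLE='NAME    MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
sda       8:0    0   10G  0 disk
nvme0n1 259:0    0  375G  0 disk
nvme0n2 259:1    0  375G  0 disk'

printf '%s\n' "$SAMPLE" | nvme_paths
```

On the real VM, the equivalent call would be `lsblk | nvme_paths`.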

Creating the RAID #

We’ll need to store the drive paths in a variable, DRIVES, to use later:

DRIVES=($(lsblk | grep -oE 'nvme[a-z0-9A-Z]*' | cut -d' ' -f1 | awk '{ print "/dev/"$1 }'))

And then use mdadm to create the RAID.

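# --create:       path for the new RAID device
# --level=0:      use RAID 0
# --raid-devices: ${#DRIVES[@]} expands to the length of the array (4)
# last argument:  ${DRIVES[@]} expands to all drive paths
# (comments live up here because text after a trailing backslash breaks the command)
mdadm \
  --create /dev/md0 \
  --level=0 \
  --raid-devices=${#DRIVES[@]} \
  ${DRIVES[@]}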

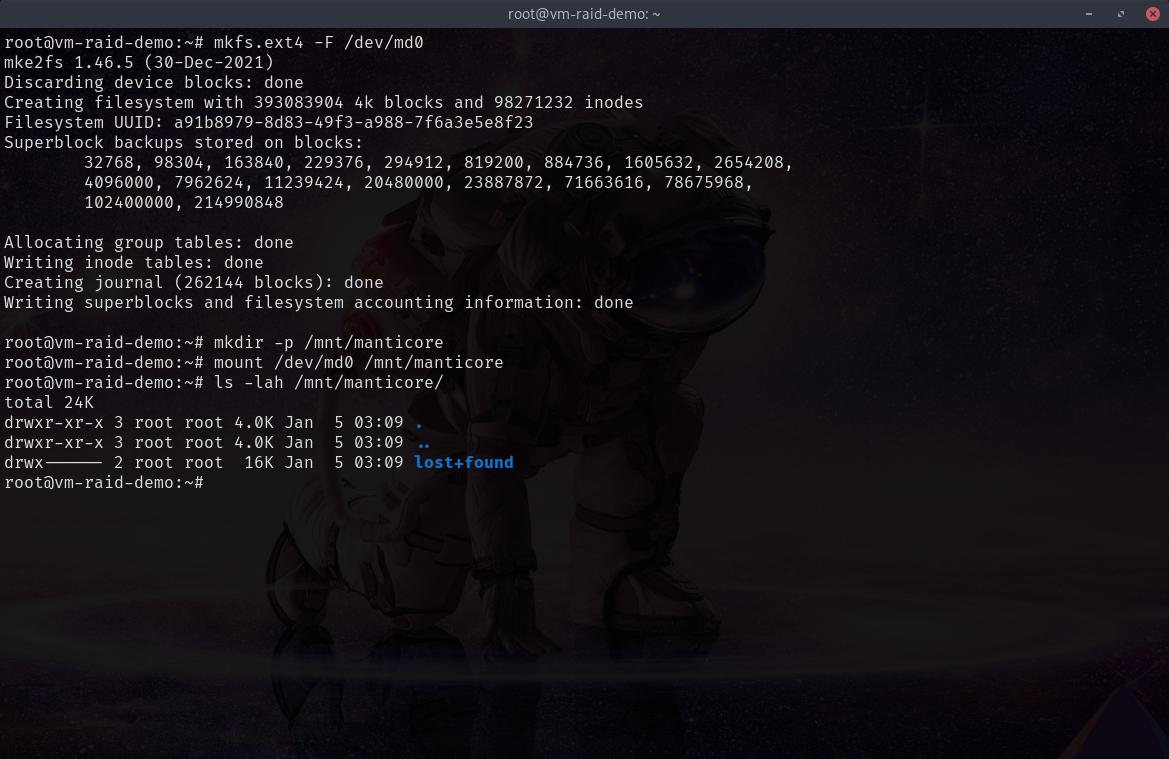
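Since mdadm is destructive, it can be reassuring to preview the exact command first. A small dry-run sketch, where raid0_cmd is a hypothetical helper of my own that only prints the command and never runs it:

```bash
# Hypothetical helper: print the mdadm command for the given drives without running it.
# "$#" is the number of arguments passed in, "$@" is every argument.
raid0_cmd() {
  echo mdadm --create /dev/md0 --level=0 --raid-devices="$#" "$@"
}

raid0_cmd /dev/nvme0n1 /dev/nvme0n2 /dev/nvme0n3 /dev/nvme0n4
```

On the VM you could call it as `raid0_cmd "${DRIVES[@]}"`. After actually creating the array, `cat /proc/mdstat` or `mdadm --detail /dev/md0` should show it.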

Format and Mount #

The following is an example I use for my work’s use case with Manticore.

It formats the RAID device as ext4 and mounts it at /mnt/manticore.

mkfs.ext4 -F /dev/md0
mkdir -p /mnt/manticore
mount /dev/md0 /mnt/manticore
ls -lah /mnt/manticore

The listing should include a lost+found directory, signaling that we have successfully created and mounted an ext4 file system.
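Another way to confirm the mount is to look for the device in /proc/mounts. A minimal sketch, assuming the usual "device mountpoint fstype ..." line layout; is_mounted is a hypothetical helper, and the sample line is made up so the check can be tried anywhere:

```bash
# Hypothetical helper: succeeds if device $1 is mounted at path $2,
# reading /proc/mounts-style lines from stdin.
is_mounted() {
  awk -v dev="$1" -v dir="$2" '$1 == dev && $2 == dir { found = 1 } END { exit !found }'
}

# Made-up sample line in /proc/mounts format.
if printf '/dev/md0 /mnt/manticore ext4 rw,relatime 0 0\n' | is_mounted /dev/md0 /mnt/manticore; then
  echo "mounted"
fi
```

On the VM, feed it the real thing: `is_mounted /dev/md0 /mnt/manticore < /proc/mounts`.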


Clean Up #

That’s it! Congrats! Super easy, right?

Now delete the VM quickly so you don’t get charged any more money.

gcloud compute instances delete vm-raid-demo

Automate with a VM Start Up Script #

To make a new VM that automatically RAIDs all NVME drives on a system at start up, use the following:

# create local file
# (EOF is quoted so that $(...) and ${...} are written to the file as-is,
# instead of being expanded by your local shell)
cat << 'EOF' > startup.sh
#!/bin/bash
DRIVES=($(lsblk | grep -oE 'nvme[a-z0-9A-Z]*' | cut -d' ' -f1 | awk '{ print "/dev/"$1 }'))
mdadm --create /dev/md0 --level=0 --raid-devices=${#DRIVES[@]} ${DRIVES[@]}
mkfs.ext4 -F /dev/md0
mkdir -p /mnt/manticore
mount /dev/md0 /mnt/manticore
chmod a+w /mnt/manticore
EOF

# create vm
gcloud compute instances create vm-raid-demo \
  --machine-type n2-standard-8 \
  --local-ssd interface=nvme \
  --local-ssd interface=nvme \
  --local-ssd interface=nvme \
  --local-ssd interface=nvme \
  --image-project ubuntu-os-cloud \
  --image-family ubuntu-2204-lts \
  --metadata-from-file=startup-script=startup.sh

# clean up
rm startup.sh
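One thing to keep in mind: as far as I know, Compute Engine runs the startup script on every boot, not only the first one, so a plain reboot would hit the mdadm and mkfs lines again. A possible guard, sketched under that assumption (raid_exists is a hypothetical helper of mine, and I have not battle-tested this across reboots):

```bash
# Hypothetical guard: only build the array when the RAID block device is missing.
# -b is true when the path exists and is a block device.
raid_exists() {
  [ -b "$1" ]
}

if raid_exists /dev/md0; then
  echo "RAID already present, skipping create and format"
else
  echo "no RAID found, mdadm and mkfs would run here"
fi
```

In the startup script, the mdadm and mkfs.ext4 lines would go in the else branch, with mkdir and mount left outside so they still run on every boot.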

TL;DR Just Give Me the Code #

DRIVES=($(lsblk | grep -oE 'nvme[a-z0-9A-Z]*' | cut -d' ' -f1 | awk '{ print "/dev/"$1 }'))
mdadm --create /dev/md0 --level=0 --raid-devices=${#DRIVES[@]} ${DRIVES[@]}
mkfs.ext4 -F /dev/md0
mkdir -p /mnt/manticore
mount /dev/md0 /mnt/manticore
chmod a+w /mnt/manticore